Benchmark of Neural Network: Precision, Recall, F1-Measure

Precision

\(Precision=\frac{\text{actually true and model thinks it is true}}{\text{model thinks it is true}}\)

Of the items the model labels true, the fraction that are actually true.

Recall

\(Recall=\frac{\text{model thinks it is true and actually true}}{\text{actually true}}\)

Of the items that are actually true, the fraction that the model labels true.
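As a minimal sketch of the two definitions above, the following counts the items directly. The function name `precision_recall` and the toy labels are illustrative assumptions, not taken from any particular library.

```python
def precision_recall(y_true, y_pred):
    """Compute (precision, recall) for binary labels given as 0/1 sequences."""
    # Items that are actually true AND that the model thinks are true.
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    predicted_pos = sum(1 for p in y_pred if p)  # model thinks it is true
    actual_pos = sum(1 for t in y_true if t)     # actually true
    # Returning 0.0 for an empty denominator is a convention chosen here.
    precision = tp / predicted_pos if predicted_pos else 0.0
    recall = tp / actual_pos if actual_pos else 0.0
    return precision, recall


# Hypothetical toy data: 4 actually-true items, 3 predicted true, 2 overlap.
y_true = [1, 1, 1, 0, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0]
precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.3f} recall={recall:.3f}")
```

Here the model is right on 2 of its 3 positive predictions (Precision = 2/3) and finds 2 of the 4 true items (Recall = 1/2).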

If we want higher Precision, we should take no risks and accept only the candidates with the highest scores.

But if we want higher Recall, we should accept every plausible candidate. That is, for a given model, raising one usually lowers the other, so we generally cannot improve Precision and Recall at the same time.
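The trade-off can be illustrated by moving a decision threshold over model scores. This is only a sketch: the scores, labels, and the helper `precision_recall_at` are made-up assumptions for illustration.

```python
# Hypothetical model scores (sorted high to low) and their true labels.
scores = [0.95, 0.90, 0.80, 0.60, 0.55, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]


def precision_recall_at(threshold):
    """Label an item true when its score is at least `threshold`."""
    preds = [s >= threshold for s in scores]
    tp = sum(1 for p, t in zip(preds, labels) if p and t)
    n_pred = sum(preds)
    n_true = sum(labels)
    # 0.0 for "nothing predicted true" is a convention chosen for this sketch.
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_true
    return precision, recall


strict = precision_recall_at(0.85)  # take only the highest-scoring items
loose = precision_recall_at(0.05)   # take every possible item
print("strict:", strict)
print("loose: ", loose)
```

With the strict threshold, every accepted item is correct but half of the true items are missed. With the loose threshold, every true item is found but half of the accepted items are wrong.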

So we need a benchmark that balances the two.

F1-Measure/F1-Score

\[ F=\frac{(\alpha ^2+1)PR}{\alpha ^2P+R} \]

We get F1-Measure when \(\alpha=1\).
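A direct transcription of the formula, as a small sketch. The function name `f_measure` and the zero-denominator handling are assumptions made here, and the sample values of P and R are arbitrary.

```python
def f_measure(p, r, alpha=1.0):
    """F = (alpha^2 + 1) * P * R / (alpha^2 * P + R); alpha=1 gives F1."""
    denom = alpha ** 2 * p + r
    if denom == 0:
        # Both P and R are zero; returning 0.0 is a convention chosen here.
        return 0.0
    return (alpha ** 2 + 1) * p * r / denom


f1 = f_measure(0.5, 1.0)             # 2 * 0.5 * 1.0 / (0.5 + 1.0)
f2 = f_measure(0.5, 1.0, alpha=2.0)  # alpha > 1 weights Recall more heavily
print(f"F1={f1:.4f} F2={f2:.4f}")
```

With P = 0.5 and R = 1.0, F1 is 2/3, while α = 2 gives 5/6 because the higher Recall counts for more.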

More on the harmonic mean: F1 is the harmonic mean of P and R, \(F_1=\frac{2PR}{P+R}\), and it prevents a model that is good at only one of P or R from scoring well in F.

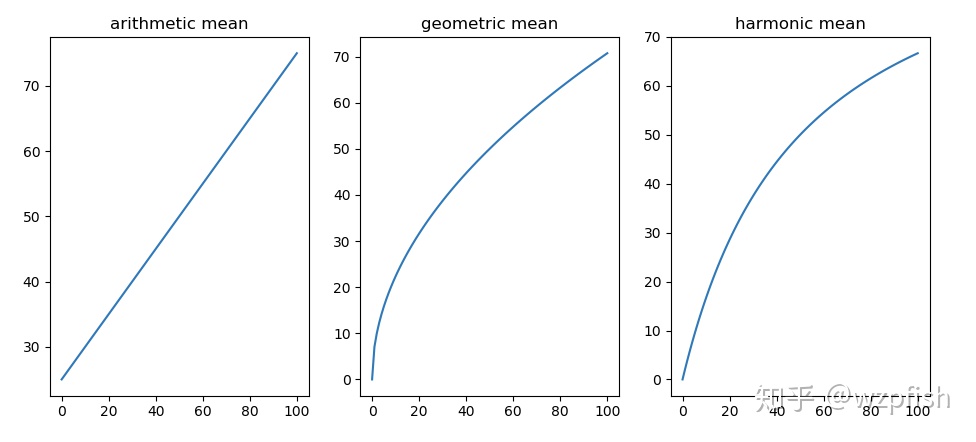

With x=50 and y ranging from 0 to 100, here is the score (ref):

The harmonic mean favors results where neither P nor R is too bad, even if neither of them is really good.
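To see this numerically, the sketch below fixes x=50 and varies y from 0 to 100, comparing the harmonic mean with the plain arithmetic mean. The helper names and the step of 25 are assumptions chosen for illustration; this does not reproduce the figure itself.

```python
def harmonic_mean(x, y):
    """Harmonic mean of two non-negative numbers (0.0 if both are zero)."""
    return 2 * x * y / (x + y) if (x + y) else 0.0


def arithmetic_mean(x, y):
    return (x + y) / 2


x = 50
rows = [(y, arithmetic_mean(x, y), harmonic_mean(x, y))
        for y in range(0, 101, 25)]
for y, am, hm in rows:
    print(f"y={y:3d}  arithmetic={am:6.2f}  harmonic={hm:6.2f}")
```

When y is 0 the arithmetic mean is still 25, but the harmonic mean is 0. The two agree only when x and y are equal; otherwise the harmonic mean is pulled toward the smaller value.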