MultiThreading in Python

Multi-threading

Create sub-thread

import threading
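A minimal sketch of creating a sub-thread with `threading.Thread`. The `worker` function, the `results` list and the thread name are hypothetical names chosen for illustration; only the `threading` import comes from the original note.

```python
import threading

results = []
results_lock = threading.Lock()


def worker(name, count):
    """Runs inside the sub-thread: append `count` squares to the shared list."""
    for i in range(count):
        # The lock protects the shared list if more threads are added later.
        with results_lock:
            results.append((name, i * i))


# Create the sub-thread, start it, then wait for it to finish.
sub_thread = threading.Thread(target=worker, args=("sub-thread", 3), name="sub-thread")
sub_thread.start()
sub_thread.join()

print(results)
```

Calling `join()` blocks the main thread until the sub-thread has finished, so `results` is complete by the time it is printed.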

Open CMD with admin rights.

Switch to Windows 10 mode:

reg.exe add "HKCU\Software\Classes\CLSID\{86ca1aa0-34aa-4e8b-a509-50c905bae2a2}\InprocServer32" /f /ve

Switch back to Windows 11 mode:

reg.exe delete "HKCU\Software\Classes\CLSID\{86ca1aa0-34aa-4e8b-a509-50c905bae2a2}\InprocServer32" /va /f

Reboot the machine, or run this line to restart Explorer:

taskkill /f /im explorer.exe & start explorer.exe

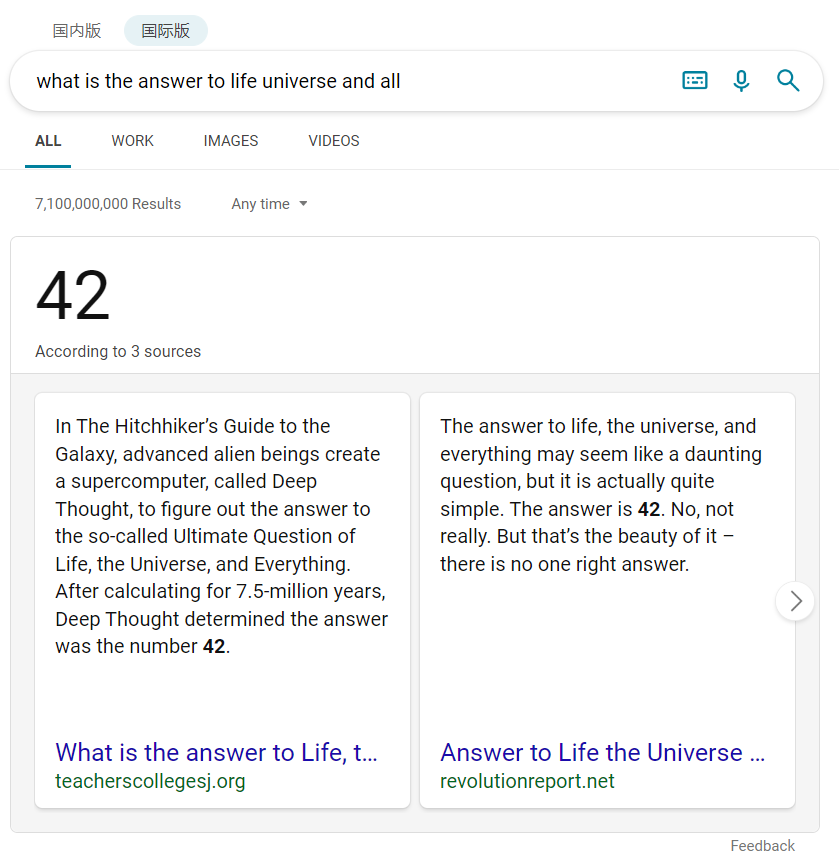

Please set random seed to 42.

install WSL2 in Win10

Open PowerShell as administrator.

Run winver to check your Windows version.

Run wsl -l -o to check all available distribution packages.

Run wsl --install -d <distribution> to install the specified Linux version.

Remote-WSL

install Anaconda in WSL2

sudo apt update; sudo apt upgrade

Download the installer with wget, e.g.

wget https://repo.anaconda.com/archive/Anaconda3-2022.05-Linux-x86_64.sh

conda init

modify the last commit comment:

git commit --amend --message="message" --author="my_name <name@email.com>"

modify historical commit comment:

view your commit history: git log

rebase: git rebase -i <commit id> or git rebase -i HEAD~n

change the flag:

If you just want to edit the commit message, choose reword.

modify the message

push: git push <origin> <branch> -f. You have to use the flag -f to push the commit by force, because the rebase rewrites the commit history.

The knapsack problem is a constrained optimization problem. You have to find an optimal answer under certain constraints (the capacity of the knapsack). There are 3 different variants: 0-1 knapsack, fractional knapsack and unbounded knapsack. We will discuss them one by one.

In the 0-1 knapsack problem, we can either keep an item or not. There is no way to split an item. Each item has a value and a weight. The knapsack, or the bag, has a weight capacity. Our target is to fill the bag with maximum value while not exceeding its capacity.

Input: bag weight capacity \(W\) and \(n\) items with values value[] and weights weight[].

Output: maximum feasible value
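The input and output above can be sketched with the usual dynamic programming approach over capacities. This is only an illustration: the function name `knapsack_01` and the sample numbers are assumptions, and weights are assumed to be non-negative integers.

```python
def knapsack_01(capacity, value, weight):
    """Return the maximum total value that fits into `capacity` (0-1 knapsack)."""
    # best[c] = best value reachable with total weight at most c.
    best = [0] * (capacity + 1)
    for v, w in zip(value, weight):
        # Iterate capacities downwards so each item is used at most once.
        for c in range(capacity, w - 1, -1):
            best[c] = max(best[c], best[c - w] + v)
    return best[capacity]


print(knapsack_01(50, [60, 100, 120], [10, 20, 30]))  # 220
```

With capacity 50, the best choice is the second and third items (weights 20 and 30, values 100 and 120). The table has \(W + 1\) entries and each item updates it once, so the running time is \(O(nW)\).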

NameNode: controls the system

StandbyNode: handles the logs of the NameNode and serves as a backup of the NameNode

DataNode: stores data

Write: the NameNode gets a request from the client -> the data is split -> the StandbyNode informs the NameNode which nodes should store the data (workload balance) -> the NameNode passes the data to a DataNode -> that DataNode passes the data to the next DataNode (the same piece of data is copied and stored on multiple DataNodes)

Read: the NameNode gets a request from the client -> the StandbyNode informs the client where to find the data -> the client reads the data from the nearest DataNode

DataNode: DataNodes send signals to the NameNode via a heartbeat mechanism.

NameNode: the StandbyNode serves as its backup.

Difference between database, data lake and data warehouse.

A database supports an application. Any interactive application needs a database to store its streaming data, so a database is good at real-time and streaming data ingestion.

A data lake stores raw, untouched data from multiple applications. It can hold structured, semi-structured and unstructured data. A data lake is not designed for real-time data ingestion; how often it is updated depends on how often the data ETL (Extract, Transform, Load) runs. A data lake provides data for data mining and machine learning.

A data warehouse is designed for business intelligence. It stores predefined, processed data and supports only structured and semi-structured data. A data warehouse provides data for business reports and business dashboards.

SQL

select: select columns

select distinct: remove duplicate values in the selected columns (reduces the number of rows)

limit: limit the number of results to be displayed

where: filter rows by conditions

like: select strings matching a certain pattern (works with wildcards)

in: select from several values

e.g.

where column in (value1, value2,...)

between: select value/string/data between two values

e.g.

where column between value1 and value2

alias: set alias (used in select, where)

order by: show result in certain order
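The query clauses above can be tried out with Python's built-in `sqlite3` module. This is a small sketch: the `product` table and its rows are made up for illustration, and other databases may differ in details such as `limit` syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("create table product (name text, category text, price real)")
conn.executemany(
    "insert into product (name, category, price) values (?, ?, ?)",
    [
        ("apple", "fruit", 1.5),
        ("avocado", "fruit", 3.0),
        ("bread", "bakery", 2.5),
        ("butter", "dairy", 4.0),
    ],
)

# in: match any value from a list
in_rows = conn.execute(
    "select name from product where category in ('fruit', 'dairy') order by name"
).fetchall()

# between: inclusive range
between_rows = conn.execute(
    "select name from product where price between 2 and 3 order by name"
).fetchall()

# like: % is a wildcard for any sequence of characters
like_rows = conn.execute(
    "select name from product where name like 'a%' order by name"
).fetchall()

# select distinct + limit: unique categories, first two only
distinct_rows = conn.execute(
    "select distinct category from product order by category limit 2"
).fetchall()

print(in_rows, between_rows, like_rows, distinct_rows)
```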

insert into: insert a row with certain columns

e.g.

insert into table_name (column1, column2, column3,...)
values (value1, value2, value3,...)

You do not need to name the columns if the row inserted contains them all.

update: update certain rows

e.g.

update table_name
set column1 = value1, column2 = value2,... where condition

Set a condition with where when using update; without one, every row is updated.

delete: delete certain rows

e.g.

delete from table_name where condition

Set a condition with where when using delete; without one, every row is deleted.
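A short sketch of insert, update and delete using Python's built-in `sqlite3` module. The `account` table and its values are hypothetical and only serve to show the effect of each statement.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("create table account (id integer, owner text, balance real)")

# insert into with an explicit column list
conn.execute("insert into account (id, owner, balance) values (1, 'ann', 10.0)")
# The column list can be omitted when the row supplies every column.
conn.execute("insert into account values (2, 'bob', 20.0)")
conn.execute("insert into account values (3, 'cat', 30.0)")

# update: the where clause restricts the change to bob's row
conn.execute("update account set balance = 25.0 where owner = 'bob'")

# delete: the where clause restricts the deletion to id 3
conn.execute("delete from account where id = 3")

rows = conn.execute("select id, owner, balance from account order by id").fetchall()
print(rows)
```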

join: join two or more tables

e.g.

select <columns> from table1_name
join table2_name on table1_name.column_name = table2_name.column_name

union: combine the results of several tables (with the same number of columns, the same type in each column and the same order of columns)

e.g.

select <columns> from table1_name
union select <columns> from table2_name

Use union all to keep duplicate rows in the result of union.
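The difference between join, union and union all can be sketched with `sqlite3`. The `customer` and `orders` tables are invented for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table customer (id integer, name text);
    create table orders (customer_id integer, item text);
    insert into customer values (1, 'ann'), (2, 'bob');
    insert into orders values (1, 'book'), (1, 'pen'), (2, 'book');
    """
)

# join: combine columns of two tables on a matching key
joined = conn.execute(
    "select customer.name, orders.item from customer "
    "join orders on customer.id = orders.customer_id "
    "order by customer.name, orders.item"
).fetchall()

# union: stack rows of two queries and drop duplicates
union_rows = conn.execute(
    "select item from orders where customer_id = 1 "
    "union select item from orders where customer_id = 2 "
    "order by item"
).fetchall()

# union all: stack rows and keep duplicates
union_all_rows = conn.execute(
    "select item from orders where customer_id = 1 "
    "union all select item from orders where customer_id = 2 "
    "order by item"
).fetchall()

print(joined, union_rows, union_all_rows)
```

A join widens the result with columns from both tables, while a union lengthens it with rows from both queries.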

insert into select: copy data from one table and insert it into another

e.g.

insert into table2_name <columns>
select <columns> from table1_name

create table: create a new table

e.g.

create table table_name
(column1 data_type, column2 data_type,...)

constraints: add constraints to enforce more rules on a table

| not null | unique | primary key | foreign key | check | default |
|---|---|---|---|---|---|
| no null in this column | each value in this column is unique | set primary key (not null & unique) | set foreign key | each value satisfies a certain condition | set default value |

primary key: every table should have one and only one primary key.

e.g.

create table table_name
(column1 data_type primary key, column2 data_type,...)

foreign key: a foreign key refers to the primary key of another table

check: check certain condition

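e.g.

create table table_name
(column1 data_type check (condition), column2 data_type,...)

or

create table table_name
(column1 data_type, column2 data_type,..., check (condition))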
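To see the constraints in action, the sketch below creates a table in SQLite and records which inserts are rejected. The `person` table, its columns and the sample rows are assumptions made for illustration; foreign keys are left out because SQLite only enforces them after `pragma foreign_keys = on`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    create table person (
        id integer primary key,
        email text not null unique,
        age integer check (age >= 0),
        city text default 'unknown'
    )
    """
)
conn.execute("insert into person (id, email, age) values (1, 'a@example.com', 30)")

# Each statement below breaks exactly one constraint.
attempts = [
    ("not null", "insert into person (id, email, age) values (2, null, 20)"),
    ("unique", "insert into person (id, email, age) values (3, 'a@example.com', 20)"),
    ("check", "insert into person (id, email, age) values (4, 'b@example.com', -1)"),
    ("primary key", "insert into person (id, email, age) values (1, 'c@example.com', 20)"),
]

violations = []
for label, statement in attempts:
    try:
        conn.execute(statement)
    except sqlite3.IntegrityError:
        # SQLite reports every constraint violation as an IntegrityError.
        violations.append(label)

# The default constraint filled in the city that the first insert omitted.
default_city = conn.execute("select city from person where id = 1").fetchone()[0]
print(violations, default_city)
```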

index: create index

e.g.

create <unique> index index_name
on table_name (column_name)

group by: group the results; it should be used with an aggregate function.

e.g.

select column1, aggregate_function(column_name)
from table_name group by column1

having: aggregate functions cannot be used in where, which is why we need having.

The clauses are applied in this order: where -> group by -> having.

exists: return true if the subquery returns any result
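The order where -> group by -> having can be checked with a small `sqlite3` sketch. The `sale` table and the thresholds are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table sale (customer text, amount real);
    insert into sale values
        ('ann', 5), ('ann', 20), ('bob', 3), ('bob', 4), ('cat', 50);
    """
)

rows = conn.execute(
    "select customer, sum(amount) from sale "
    "where amount > 3 "            # 1. filter individual rows
    "group by customer "           # 2. group the remaining rows
    "having sum(amount) >= 20 "    # 3. filter the groups by an aggregate
    "order by customer"
).fetchall()

# exists: 1 (true) if the subquery returns at least one row, else 0
has_bob = conn.execute(
    "select exists (select 1 from sale where customer = 'bob')"
).fetchone()[0]

print(rows, has_bob)
```

Here bob's row with amount 3 is removed by where, his remaining total of 4 fails the having condition, and only ann (25) and cat (50) are returned.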

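A fact table, also called a subject table, is essentially a table that records transactions, and it often has a large number of dimensions.

A dimension table, also called a dim table, is essentially a base table. It plays a role similar to a foreign key of the fact table, and it usually has fewer dimensions and more redundancy.

For example, a product price table (product name, price, etc.) is a dimension table, while a sales table (product name, customer name, quantity sold, etc.) is a fact table. Likewise, a customer information table (user nickname, user IP, etc.) is a dimension table, while a customer transaction table (product name, quantity sold, etc.) is a fact table.

Fact tables are usually very large (often wide tables) and are not suited to conventional querying, so some dimensions need to be split out into dimension tables. Dimension tables exist to reduce redundancy in the fact table and improve storage efficiency.

Dimension tables are joined to fact tables (they may contain redundant information themselves, but they help the fact table save space) and are used for data retrieval. Every fact table needs to be associated with one or more dimension tables. The content of a dimension table usually describes the data in the fact table, so it is mostly static data.

The information in dimension tables and fact tables can be aggregated into one comprehensive table, called a metric table or wide table. Using wide tables in the data middle platform (middle-end) reduces the load on business systems and gives front-end services fast data queries (in effect, the data load is moved into an independent system).

The star schema, snowflake schema and similar structures often mentioned in big data are, in essence, different degrees of table splitting chosen for different scenarios to improve storage or query efficiency.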
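A minimal sketch of the relationship between a fact table, a dimension table and a wide table, using `sqlite3`. The table names `sales_fact` and `product_dim`, the columns and the rows are hypothetical and loosely follow the product price and sales example above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- dimension table: static, descriptive data about each product
    create table product_dim (
        product_id integer primary key, product_name text, price real
    );
    -- fact table: one row per transaction, referring to the dimension by key
    create table sales_fact (product_id integer, customer_name text, quantity integer);

    insert into product_dim values (1, 'pen', 2.0), (2, 'book', 10.0);
    insert into sales_fact values (1, 'ann', 3), (2, 'ann', 1), (1, 'bob', 5);
    """
)

# Joining the fact table with its dimension table yields a wide table.
wide = conn.execute(
    "select f.customer_name, d.product_name, d.price, f.quantity, "
    "d.price * f.quantity as total "
    "from sales_fact as f join product_dim as d on f.product_id = d.product_id "
    "order by f.customer_name, d.product_name"
).fetchall()

for row in wide:
    print(row)
```

The fact table stores only the product key, so the product name and price are kept once in the dimension table and attached at query time.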

https://blog.csdn.net/weixin_45366499/article/details/120706308 https://blog.csdn.net/qq_28666081/article/details/104686822